Running local LLM on your computer

Local LLM

The idea of running large language models (LLMs) like GPT-4 on a personal computer sounds so cool. Think of ChatGPT, but without an internet connection and without sending your data to a third party. I tried this a few months back with a simpler model called LLaVA 1.5. Here are step-by-step instructions for Windows. This information is widely available on the internet. See this blog post 1 and the GitHub instructions 2.

Head over to the llamafile GitHub repository.

Download the latest llamafile release (llamafile-0.6.2 at the time of writing).

Rename the file by adding .exe to the end, e.g. llamafile-0.6.2.exe.

Download the model you want to run. LLaVA 1.5 is a relatively small model at 3.97 GB. Here’s the link to download llava-v1.5-7b-q4.llamafile.

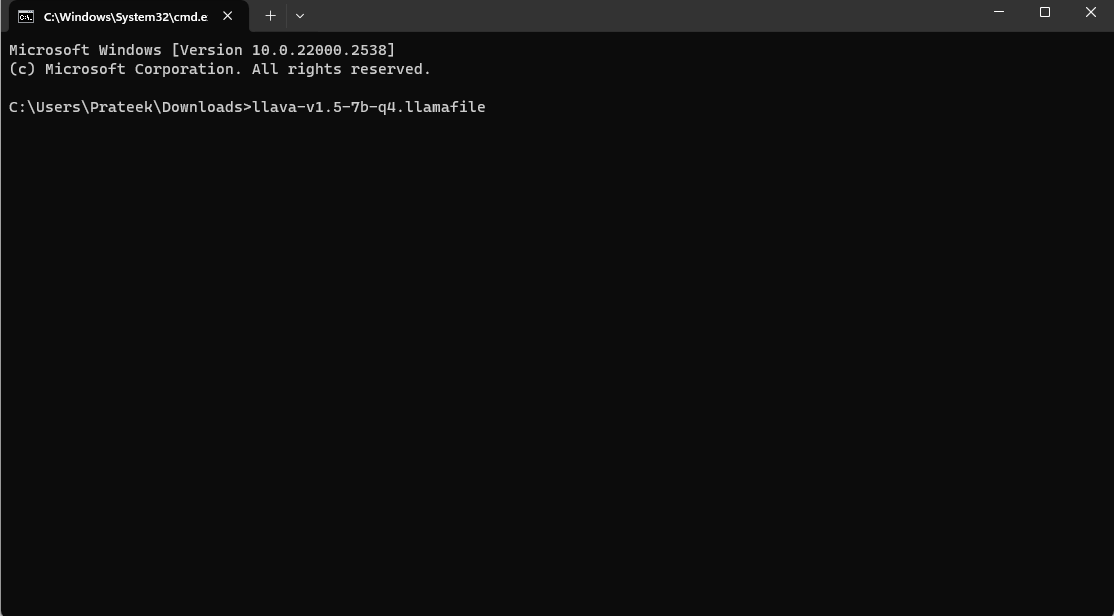

Open a terminal in the same folder (Shift+right-click > Open terminal). Make sure it opens Command Prompt, not PowerShell.

Type the llamafile name in the terminal and press Enter:

llava-v1.5-7b-q4.llamafile

This should open a chat interface in the browser. If it doesn't, go to http://127.0.0.1:8080/ in your browser.

Start interacting with the chatbot.

Press Ctrl+C in the terminal to close llamafile.
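While llamafile is still running (before you press Ctrl+C), you can also talk to it from a script instead of the browser. The sketch below is not from the original steps. It assumes your llamafile build exposes the OpenAI-style `/v1/chat/completions` endpoint described in the llamafile README, on the same 127.0.0.1:8080 address as the chat page. The `"local-model"` name is a placeholder I made up, and the helper names are mine too.

```python
import json
import socket
import urllib.request

BASE_URL = "http://127.0.0.1:8080"  # same address as the browser chat page


def server_is_up(host="127.0.0.1", port=8080, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def build_chat_request(prompt, base_url=BASE_URL):
    """Build (but do not send) a POST request for the chat endpoint."""
    payload = {
        # Assumption: placeholder model name, not something llamafile requires.
        "model": "local-model",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        base_url + "/v1/chat/completions",  # assumed OpenAI-style endpoint
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    # Assumes an OpenAI-style response: choices -> message -> content.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    if server_is_up():
        print(ask("Write a two-line poem about running an LLM locally."))
    else:
        print("Nothing is listening on 127.0.0.1:8080. Is llamafile running?")
```

This only uses the Python standard library, so there is nothing extra to install. If the endpoint path differs in your version, the request will fail with an HTTP error rather than return a reply.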

Thoughts

The model itself is not very good. In my testing, it hallucinates a lot more than ChatGPT or Gemini. I mostly asked it about well-known information from my field of research. But it's good for generating stories, poetry, captions for photos, and other similar things.